A landmark ruling by the UK High Court has largely sided with AI company Stability AI in a major copyright lawsuit brought by Getty Images, raising significant concerns among artists and creators about the future protection of their work. Judge Joanna Smith ruled that training an AI model on copyrighted works without storing or reproducing those works in the model itself does not constitute secondary copyright infringement under UK law.

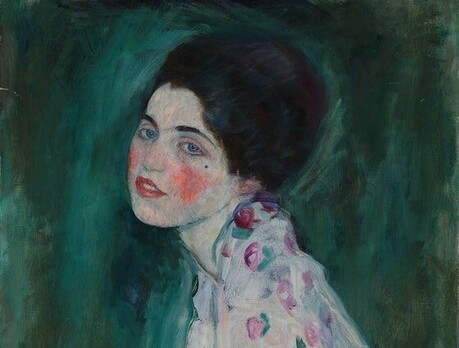

Getty Images had sued Stability AI, claiming the company committed secondary copyright infringement by training its Stable Diffusion AI image model on millions of images from Getty's stock photo library without permission. The lawsuit gained attention when Getty watermarks appeared on AI-generated images, providing clear evidence that the company's photos had been used in the training process. Stable Diffusion, first released in August 2022, has undergone several updates and remains one of the most widely used AI image generation tools.

Despite acknowledging that Getty's copyrighted images were used in training, Judge Smith ruled decisively in favor of Stability AI on the main copyright claims. "An AI model such as Stable Diffusion which does not store or reproduce any copyright works (and has never done so) is not an infringing copy," the judge stated. However, the court did side with Getty on certain trademark infringement claims related to watermarks, suggesting that AI developers could face legal action if their models clearly reproduce trademarks.

Legal experts are divided on the implications of this ruling for the creative industry. Simon Barker, Partner and Head of Intellectual Property at law firm Freeths, believes the judgment "strikes a balance between protecting the interests of creative industries and enabling technological innovation." He noted that while AI developers can take some comfort that training on large datasets won't automatically expose them to copyright liability in the UK, they still risk trademark infringement if AI-generated outputs reproduce protected marks in confusing ways.

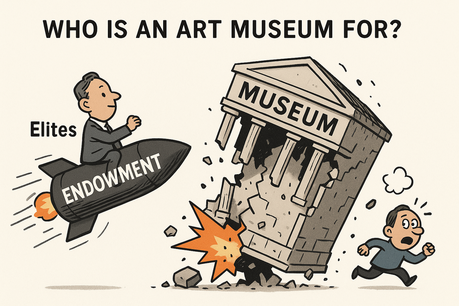

However, other legal professionals view the ruling as a significant setback for artists' rights. Rebecca Newman, a legal director at Addleshaw Goddard, warned that the decision "is a blow to copyright owners' exclusive right to profit from their work" and demonstrates that "the UK's secondary copyright regime is not strong enough to protect its creators." She expressed concern that the ruling undermines the fundamental principle that creators should have control over how their work is used commercially.

James Clark, Data Protection, AI and Digital Regulation partner at Spencer West LLP, highlighted the practical challenges this ruling creates for the creative industry. "The judgment usefully highlights the problem that the creative industry has in bringing a successful copyright infringement claim in relation to the training of large language models," he explained. Clark noted that during the training process, AI models don't make copies of works or reproduce them directly, but rather "learn" from them in a way similar to human learning.

The technical aspects of how AI models work played a crucial role in the court's decision. An expert report quoted in the judgment explained that "diffusion models learn the statistics of patterns which are associated with certain concepts found in the text labels applied to their training data, i.e., they learn a probability distribution associated with certain concepts" rather than storing the actual training data. This technical distinction proved pivotal in the court's reasoning.
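To illustrate the distinction the expert report draws, here is a deliberately tiny sketch in Python. It is not a diffusion model and has nothing to do with Stable Diffusion's actual code. It assumes a toy "model" that keeps only two summary statistics of its training data and then generates new values from them. The names `fit`, `generate`, and `training_data`, along with the numbers used, are hypothetical and chosen purely for illustration.

```python
import random
import statistics


def fit(samples):
    """Toy 'training': keep two summary numbers, not the samples themselves."""
    return {
        "mean": statistics.fmean(samples),
        "stdev": statistics.stdev(samples),
    }


def generate(model, n, seed=0):
    """Toy 'generation': draw new values from the learned distribution."""
    rng = random.Random(seed)
    return [rng.gauss(model["mean"], model["stdev"]) for _ in range(n)]


# Hypothetical training data, invented for this illustration.
training_data = [4.8, 5.1, 5.0, 4.9, 5.2, 5.0]

model = fit(training_data)        # the model holds only a mean and a spread
new_samples = generate(model, 3)  # fresh draws from that distribution

print(model)
print(new_samples)
```

The fitted `model` here contains only a mean and a standard deviation, so the original six values are not stored inside it. A real image model has vastly more parameters and behaves in far more complex ways, so this sketch illustrates the concept of learning a distribution and should not be read as evidence about any particular system.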

Despite the ruling, significant questions remain unresolved. Getty withdrew the part of its lawsuit relating to primary copyright infringement because Stability AI successfully argued that its AI training had not occurred within British jurisdiction. Getty still has another case against Stability AI pending in US courts, where different legal standards may apply. This jurisdictional complexity means the UK ruling may not be the final word on the matter.

Nathan Smith, IP partner at Katten Muchin Rosenman LLP, cautioned that while the ruling may appear to provide clarity, "there remains significant uncertainty." He noted that "the Court's findings on the more important questions regarding copyright infringement were constrained by jurisdictional limitations, offering little insight on whether training AI models on copyrighted works infringes intellectual property rights." Smith argued that the judgment "arguably leaves the legal waters of copyright and AI training as murky as before."

The ruling comes amid broader debates about AI and copyright protection. Courts and regulators in some jurisdictions, most prominently the United States, have held that purely AI-generated images cannot be copyrighted because they lack human authorship, creating additional complexity in the relationship between AI technology and intellectual property law. This means that while AI companies may be protected when training on existing works, the outputs they generate may receive no copyright protection of their own.

Getty Images expressed disappointment with the outcome and called for stronger regulatory measures. In a statement, the company said: "We remain deeply concerned that even well-resourced companies such as Getty Images face significant challenges in protecting their creative works given the lack of transparency requirements. We invested millions of pounds to reach this point with only one provider that we need to continue to pursue in another venue."

The company urged governments, including the UK, to "establish stronger transparency rules, which are essential to prevent costly legal battles and to allow creators to protect their rights." This call for regulatory intervention reflects growing sentiment in the creative community that existing copyright laws are inadequate to address the challenges posed by AI technology.

For artists and creators, this ruling represents a significant setback in their efforts to maintain control over how their work is used to train AI systems. Many in the creative community view AI training on unlicensed work as theft, but this legal decision suggests that current UK copyright law may not provide the protection they seek. The ruling could influence similar cases and policy decisions both in the UK and internationally, potentially shaping the future relationship between AI development and artists' rights.